EndoLoc

In-vivo Monocular Visual Localization of Endoscope

Duration: 2024.3-2025.4

Related Publications: PRICAI’24 (Shao et al., 2024), ICRA’25 (Shao et al., 2025), TII’25 (Shao et al., 2025), TCSVT (In Revision)

Funded by: Key Research and Development Plan of Ningxia Hui Autonomous Region (Grant Nos. 2023BEG03043 and 2023BEG02035), National Natural Science Foundation of China (No. 82472116), Natural Science Foundation of Shanghai (No. 24ZR1404100)

Background

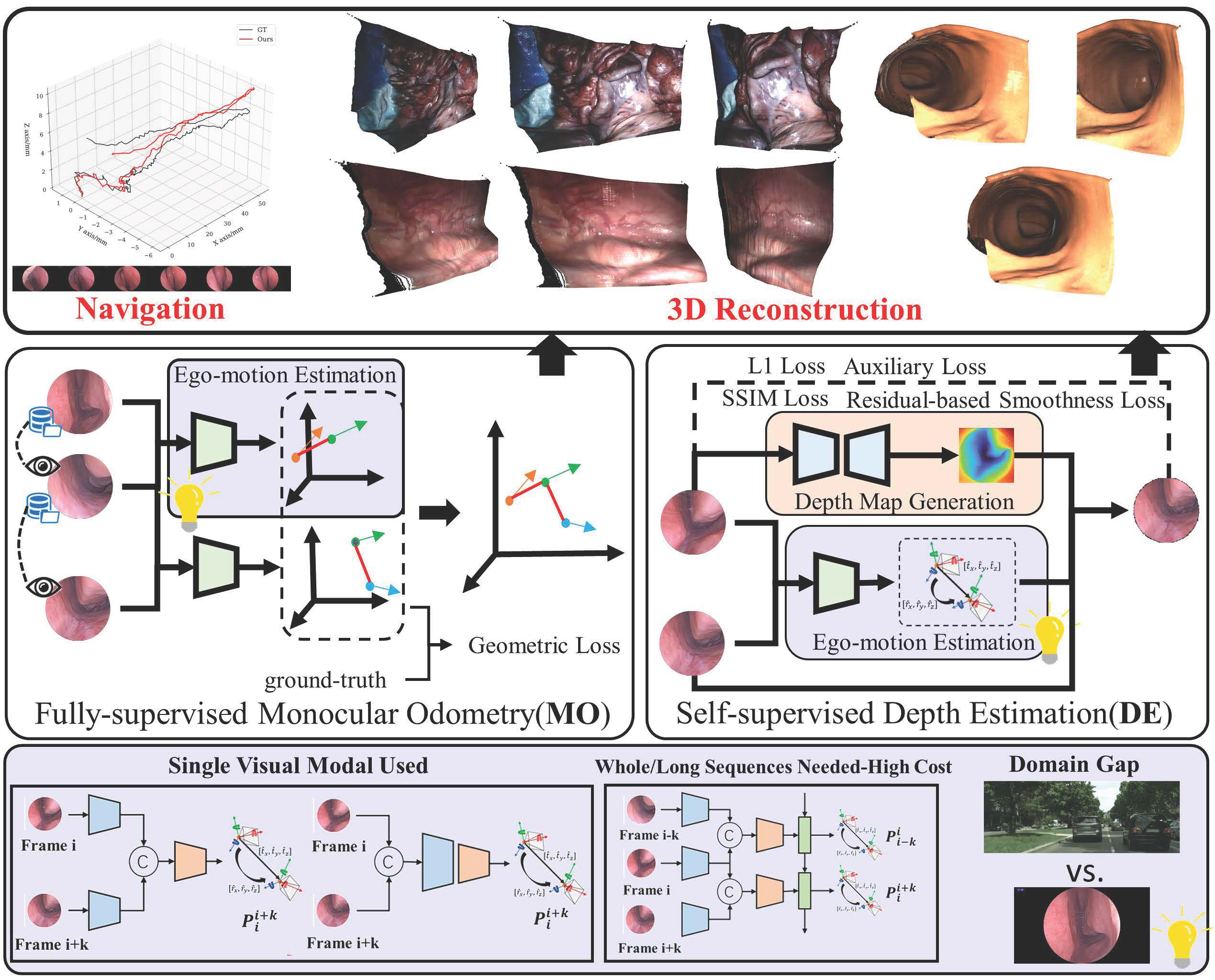

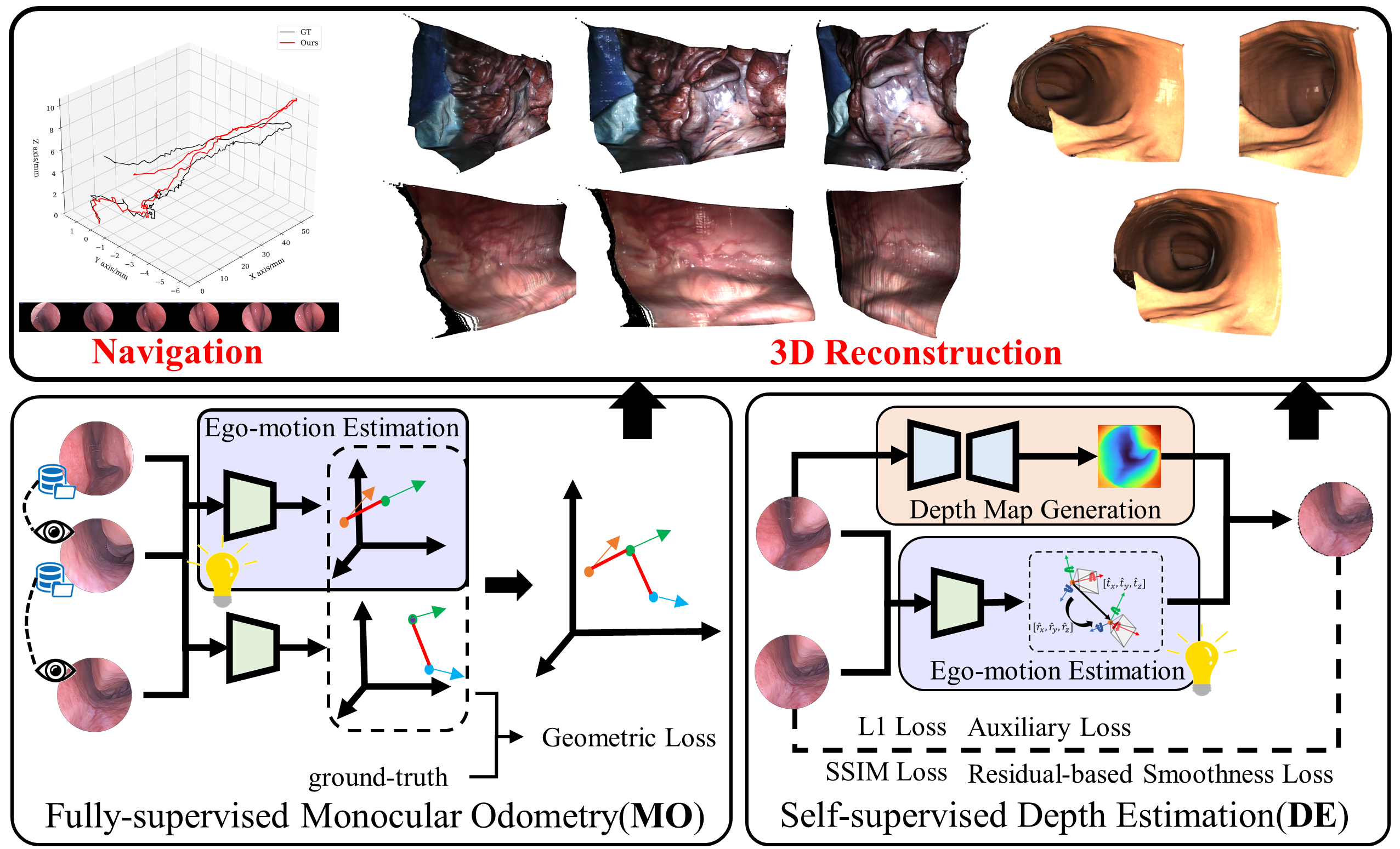

Real-time localization of the endoscope is essential for navigation and automation in endoscopic diagnosis and minimally invasive surgery.

However, conventional localization based on optical or magnetic tracking is easily disrupted by occlusion or by electromagnetic instruments in clinical settings, and it is complicated and costly to deploy.

Our Work

This project explores the following topics:

- The effect of transformation/motion features derived from estimated optical flow.

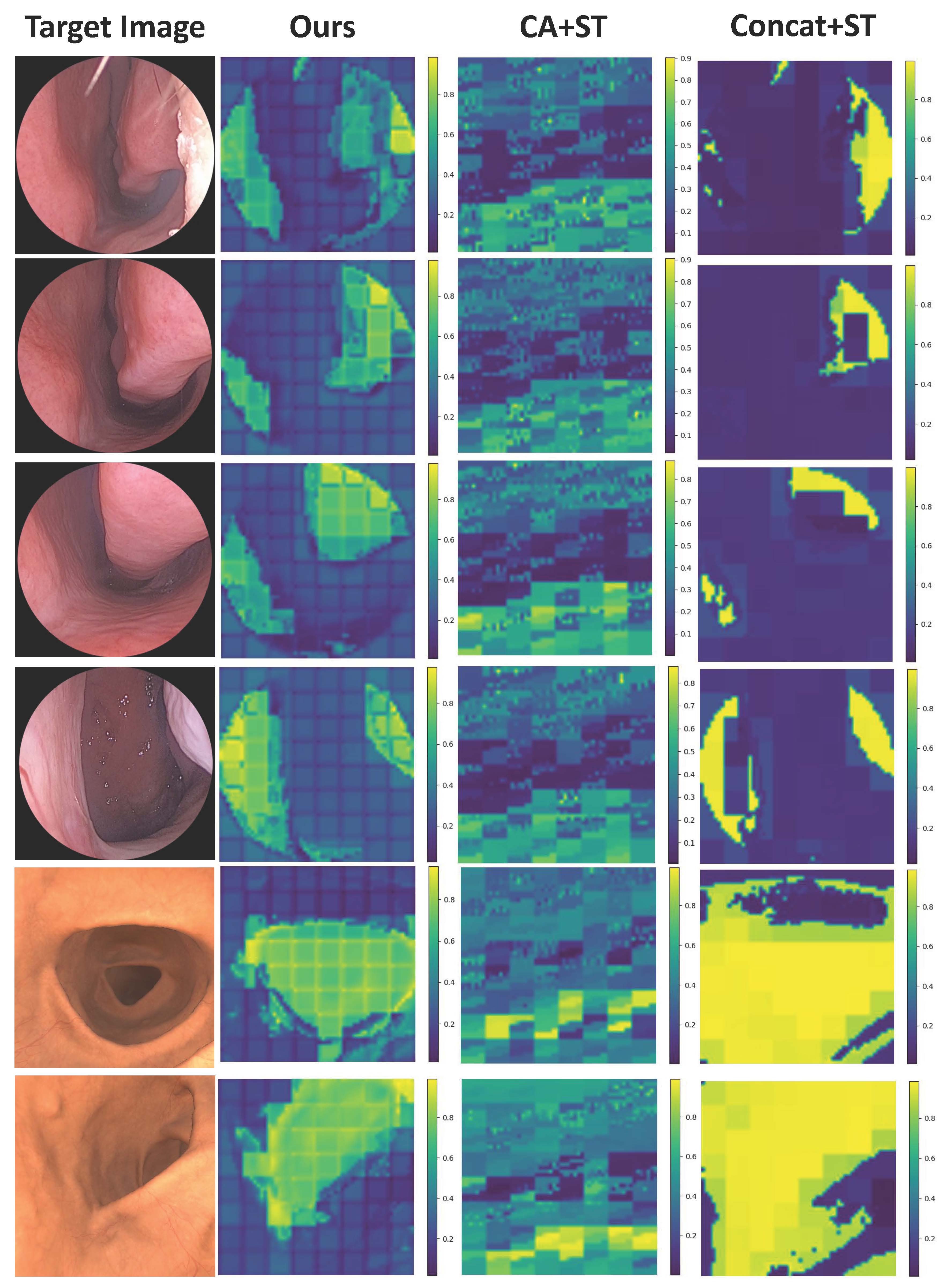

- How can more and better correlation features be extracted from endoscopic images?

- How can a pose regressor extract richer representations from a concatenated feature map with many more channels?

- More feature sources from the limited view of the endoscope.

- Applications in self-supervised depth estimation and 3D reconstruction.

We propose:

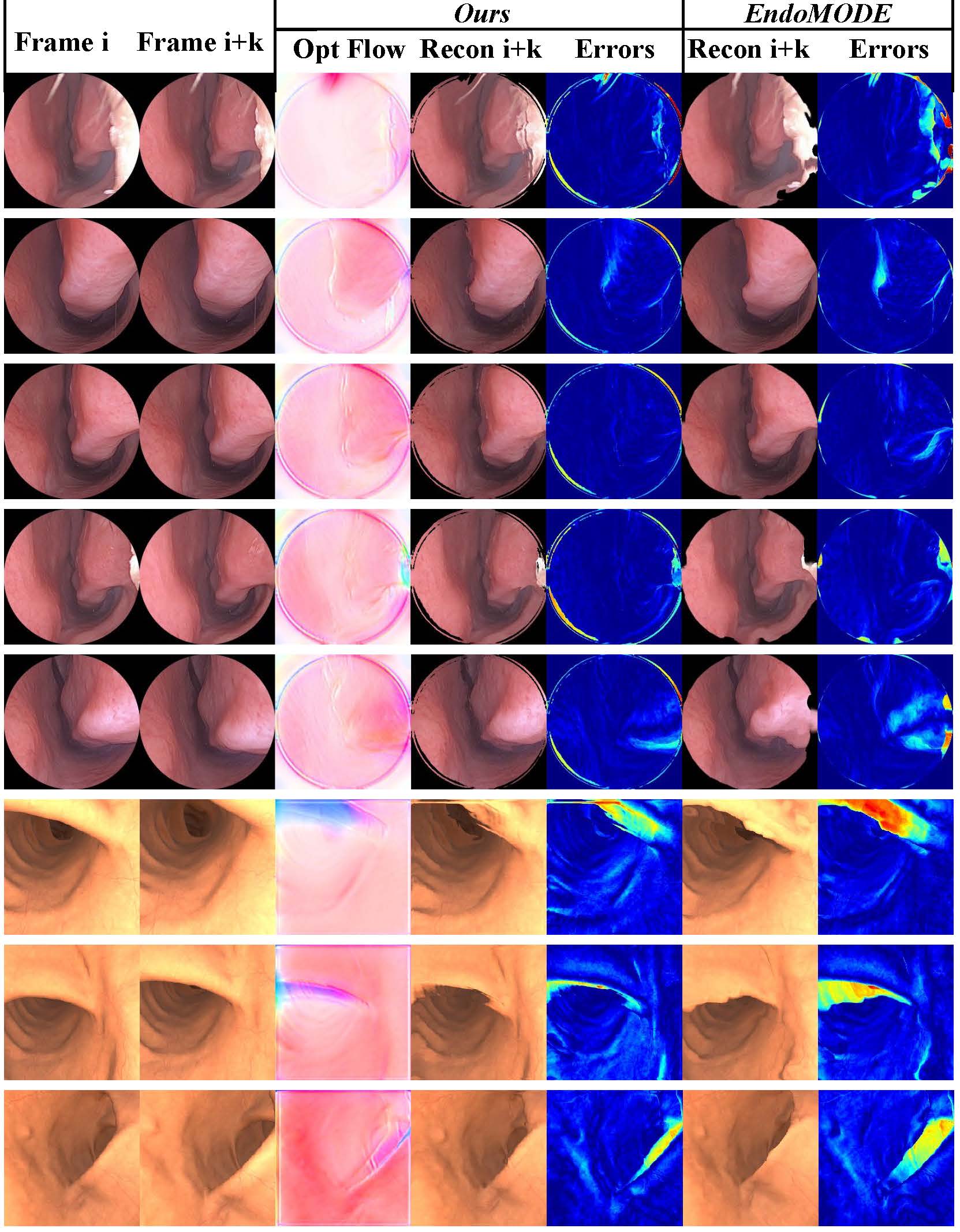

- A novel framework that integrates multiple features extracted from endoscopic observations, including transformation features from optical flow, for relative pose regression.

- A novel cross-attention-based correlation module that extracts more correlation features, from local to global, between two consecutive frames.

- A novel pose regressor that extracts more feature representations along the channel dimension.

- A novel feature encoder that can be trained stably from scratch on endoscopic data to address the domain gap.
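To make the idea of correlating two consecutive frames through cross attention more concrete, here is a minimal sketch in NumPy. It is plain scaled dot-product cross attention and not the correlation module from the publications: there are no learned projections, no local-to-global hierarchy, and the names `cross_attention`, `feat_prev`, and `feat_curr` as well as the feature-map shapes are assumptions made purely for illustration.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(feat_prev, feat_curr):
    """Correlate two feature maps of shape (C, H, W).

    Queries come from the current frame, keys and values from the
    previous frame. Hypothetical helper: a real module would add
    learned query/key/value projections.
    """
    c, h, w = feat_curr.shape
    q = feat_curr.reshape(c, h * w).T  # (N, C), one row per pixel
    k = feat_prev.reshape(c, h * w).T  # (N, C)
    v = k                              # values share the key features

    scores = q @ k.T / np.sqrt(c)      # (N, N) pixel-to-pixel similarity
    weights = softmax(scores, axis=-1) # each row sums to 1
    attended = weights @ v             # (N, C)
    return attended.T.reshape(c, h, w), weights


# Toy feature maps standing in for encoder outputs of two frames.
rng = np.random.default_rng(0)
prev = rng.standard_normal((8, 4, 5))
curr = rng.standard_normal((8, 4, 5))
out, weights = cross_attention(prev, curr)
```

Each row of `weights` describes how strongly one location in the current frame matches every location in the previous frame, which is the kind of inter-frame correlation a pose regressor could consume.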

Achievements

- Real-time, accurate monocular visual localization of the endoscope in diverse endoscopic scenes at relatively low cost.

- Publications: PRICAI’24 (Shao et al., 2024), ICRA’25 (Shao et al., 2025), TII’25 (Shao et al., 2025), TCSVT (In Revision)

References

2025

- IEEE TII

EndoMODE: A Multi-modal Visual Feature-based Ego-motion Estimation Framework for Monocular Odometry and Depth Estimation in Various Endoscopic Scenes. IEEE Transactions on Industrial Informatics, 2025

2024

- PRICAI 2024

NETrack: A Lightweight Attention-Based Network for Real-Time Pose Tracking of Nasal Endoscope Based on Endoscopic Image. In 2024 Pacific Rim International Conference on Artificial Intelligence (PRICAI), 2024