EndoGeDE

Generalizable Self-supervised Monocular Depth Estimation in Diverse Endoscopic Scenes

Duration: 2024.10-Now

Related Publications: IROS’25 Oral (Shao et al., 2025), EAAI (Submitted)

Funded by: National Natural Science Foundation of China (No. 82472116), Natural Science Foundation of Shanghai (No. 24ZR1404100)

Brief Background

Self-supervised monocular depth estimation is a significant task for low-cost and efficient three-dimensional scene perception in endoscopy.

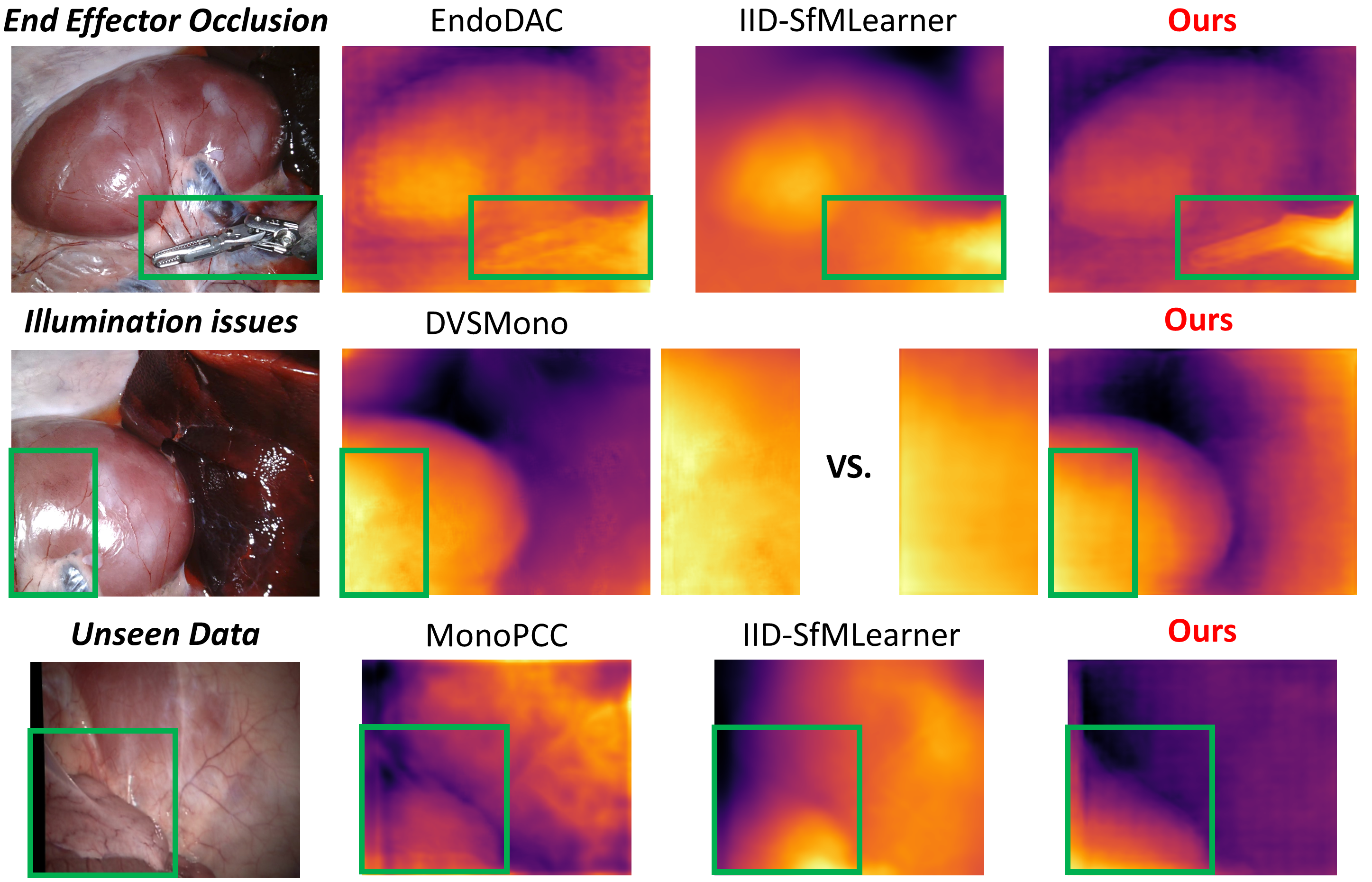

The variety of illumination conditions and scene features is still the primary challenge for generalizable depth estimation in endoscopic scenes.

Our Work

In this project, several topics listed below are explored:

- Parameter-efficient finetuning of a generalizable foundation model.

- Handling the inconsistency and diversity of endoscopic scenes.

- Application of the framework to ego-motion estimation and 3D reconstruction.

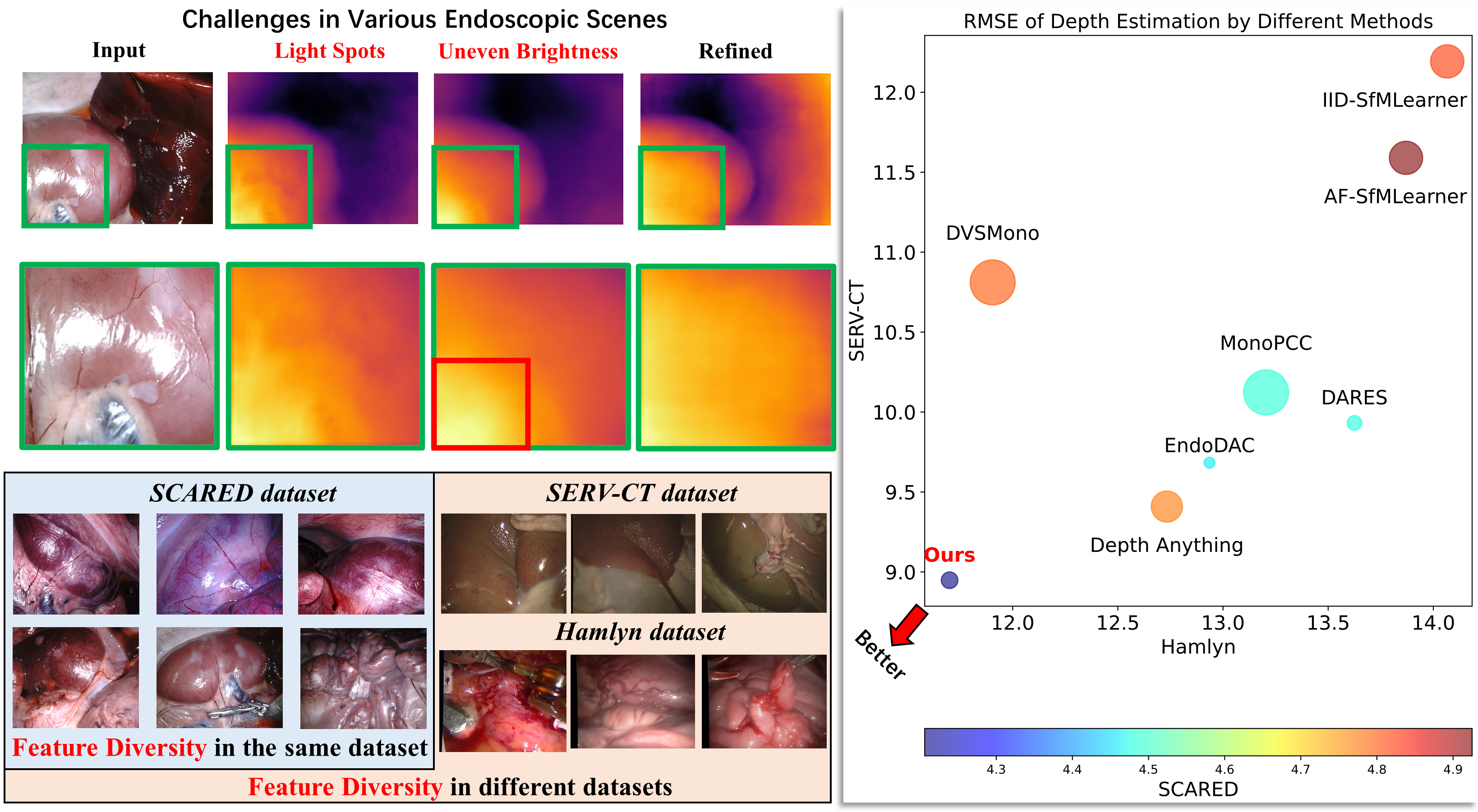

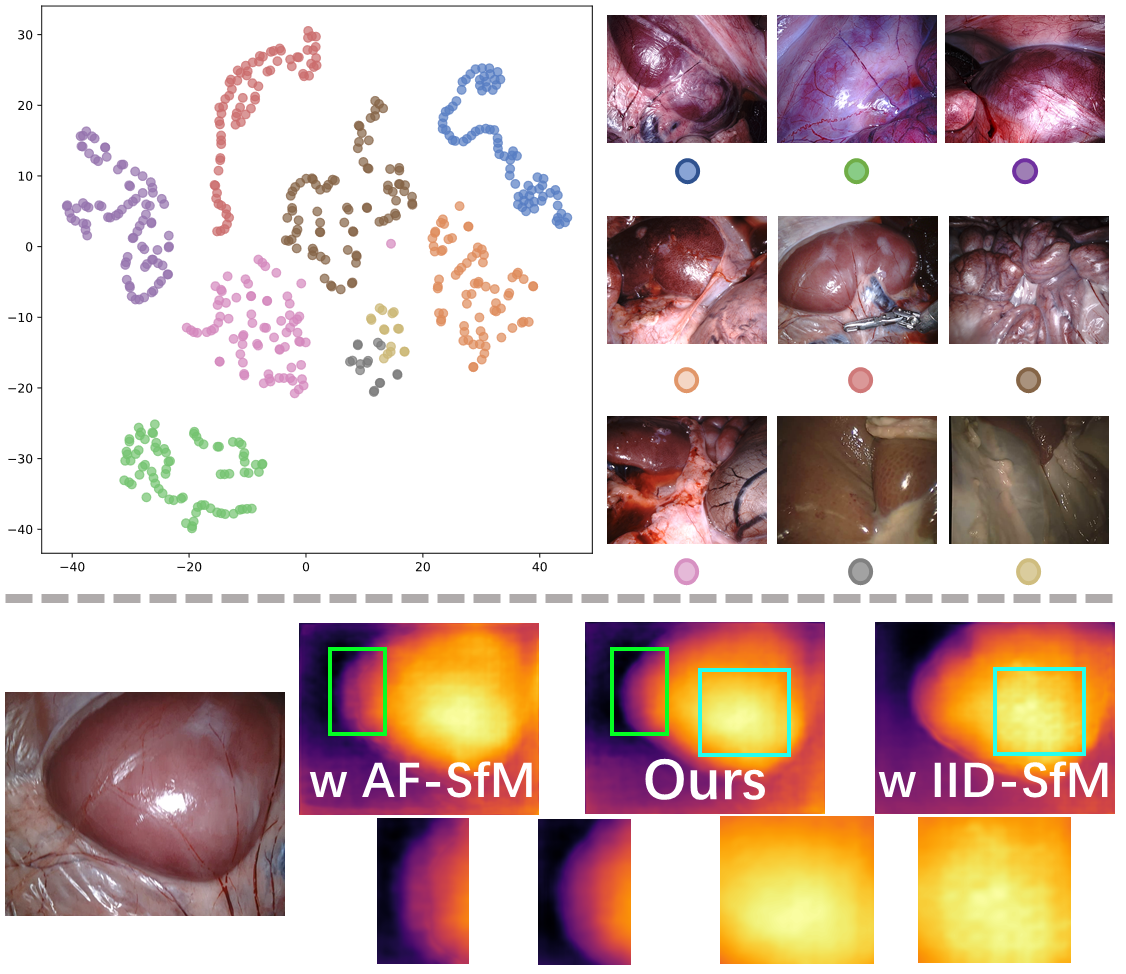

Challenges (left) and performance comparison (right) for depth estimation in diverse endoscopic scenes. The size of the circle denotes the number of trainable parameters in the corresponding depth estimation network.

We propose:

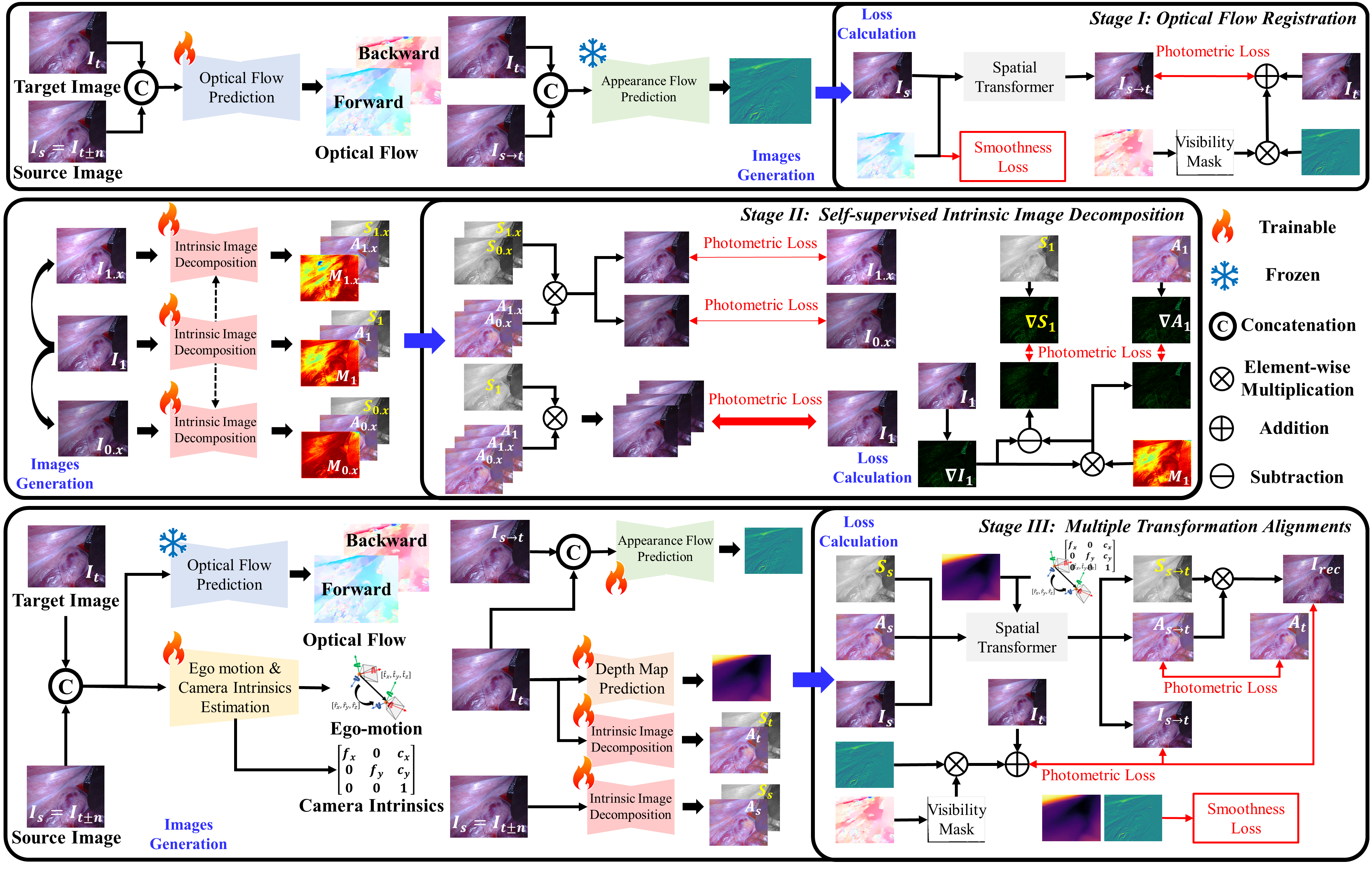

- A novel self-supervised depth estimation framework that jointly handles the inconsistency of brightness and reflectance in endoscopic scenes.

- A novel parameter-efficient finetuning method based on a block-wise mixture of dynamic low-rank experts, which adaptively weights the low-rank experts according to the diverse features of the input.

Left: The proposed multi-stage self-supervised framework. Right: The proposed parameter-efficient finetuning method.
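To illustrate the general idea behind a mixture of low-rank experts, here is a minimal NumPy sketch. It is not the implementation used in this project: the class name `LowRankExpertMixture`, the choice of ranks, the softmax router, and the mean-pooled routing feature are all assumptions made for illustration. A frozen weight stands in for one layer of a pretrained foundation model, and only the low-rank factors and the router would be trained. In a block-wise setup, one such module would presumably be attached to each block of the backbone.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


class LowRankExpertMixture:
    """Frozen linear layer plus an input-dependent mixture of low-rank updates.

    Hypothetical illustration only; names and design choices are assumptions.
    """

    def __init__(self, d_in, d_out, ranks=(2, 4, 8), seed=0):
        rng = np.random.default_rng(seed)
        # Stand-in for a frozen pretrained weight (never updated).
        self.W = rng.normal(0.0, 0.02, (d_in, d_out))
        # One (A, B) pair per expert. B starts at zero, as in LoRA,
        # so the layer initially reproduces the frozen output exactly.
        self.A = [rng.normal(0.0, 0.02, (d_in, r)) for r in ranks]
        self.B = [np.zeros((r, d_out)) for r in ranks]
        # Router that maps a pooled input feature to one logit per expert.
        self.gate = rng.normal(0.0, 0.02, (d_in, len(ranks)))

    def trainable_params(self):
        """Number of parameters that would be updated during finetuning."""
        experts = sum(a.size + b.size for a, b in zip(self.A, self.B))
        return experts + self.gate.size

    def __call__(self, x):
        # x has shape (tokens, d_in).
        # Expert weights depend on the input, so different scenes
        # can emphasize different experts.
        weights = softmax(x.mean(axis=0) @ self.gate)
        out = x @ self.W
        for w, A, B in zip(weights, self.A, self.B):
            out = out + w * (x @ A @ B)
        return out, weights


layer = LowRankExpertMixture(d_in=64, d_out=64)
tokens = np.random.default_rng(1).normal(size=(10, 64))
output, expert_weights = layer(tokens)
print(output.shape, expert_weights, layer.trainable_params(), layer.W.size)
```

In this sketch the trainable parameters (low-rank factors plus router) are fewer than those of the frozen weight they adapt, which is the usual motivation for parameter-efficient finetuning.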

Achievements

- The proposed method achieves state-of-the-art (SOTA) performance for self-supervised depth estimation on the SCARED and SimCol datasets, as well as for zero-shot depth estimation on the C3VD, Hamlyn and SERV-CT datasets, compared with existing works.

- The proposed method is evaluated in a sim-to-real test on the EndoMapper dataset.

- The proposed method is compared with SOTA methods for 3D reconstruction on the SCARED dataset.

- Publications: IROS’25 Oral (Shao et al., 2025), EAAI (Submitted)

Demo Videos of Depth Estimation in Diverse Endoscopic Scenes.

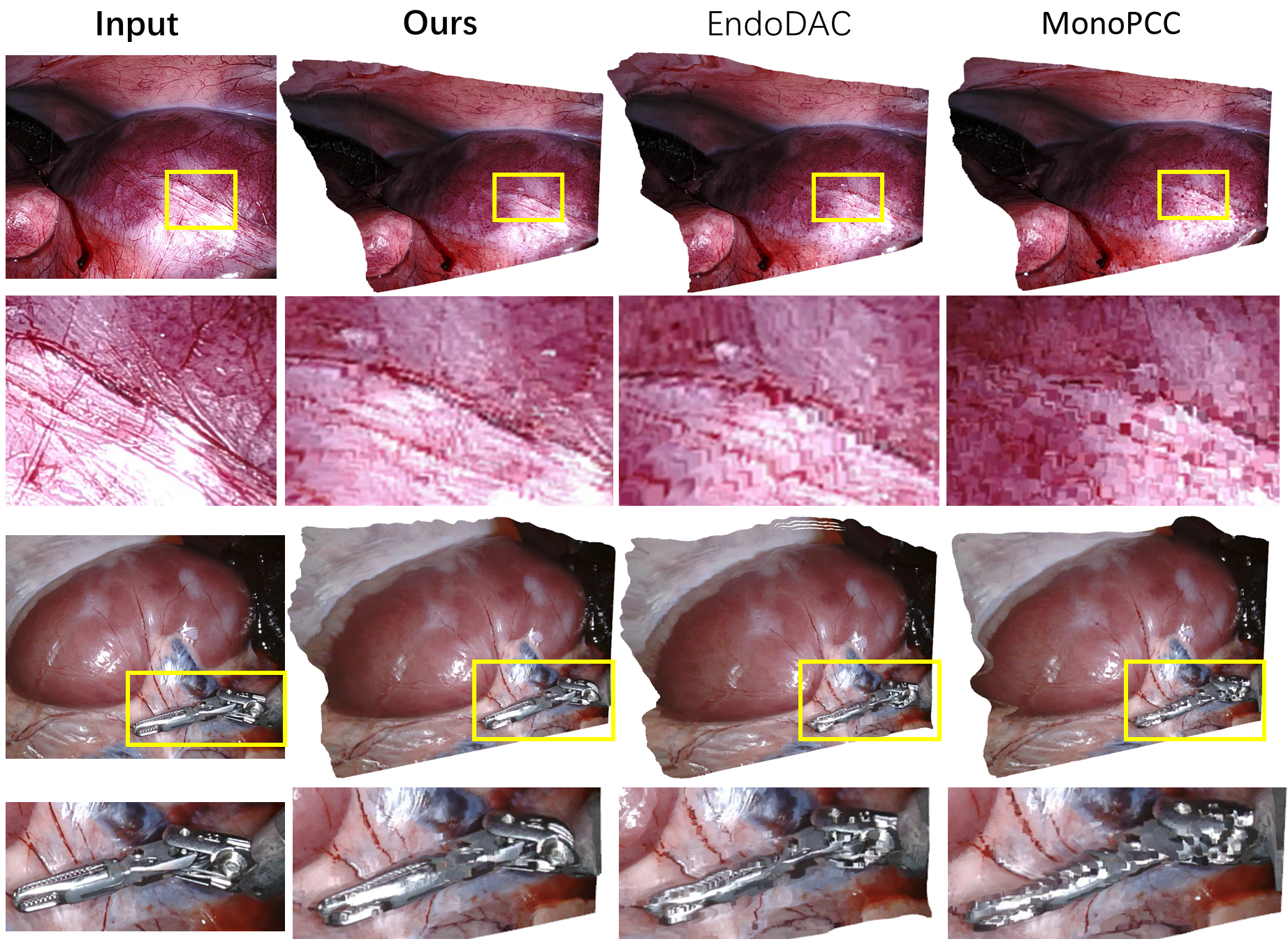

Comparison of 3D Reconstruction on Samples from the SCARED dataset.

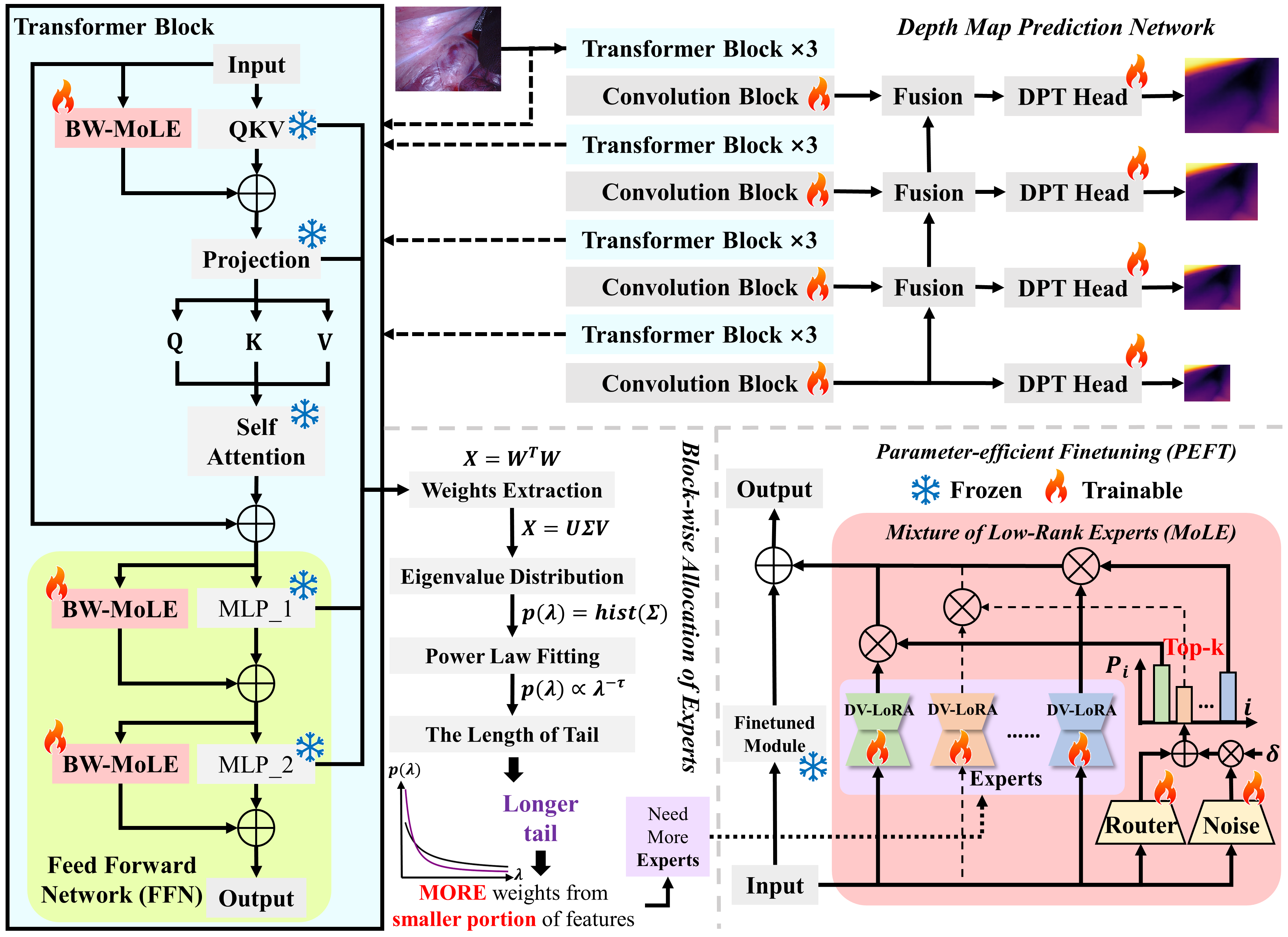

Top: Visualization of the experts' weights based on t-SNE. Bottom: Qualitative comparison of results with different SSL pipelines.

References

2025

- IROS 2025

EndoMUST: Monocular Depth Estimation for Robotic Endoscopy via End-to-end Multi-step Self-supervised Training. In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2025
